Weilin Ruan (阮炜霖)

MPhil Student @ HKUST(GZ)

Bachelor of Engineering @ Jinan University

I am a second-year MPhil student in Data Science at the Hong Kong University of Science and Technology (Guangzhou), supervised by Prof. Yuxuan Liang.

My research interests include Time Series Forecasting, Spatio-Temporal Data Mining, Multimodal Learning, Multi-Agent Systems, and Embodied AI.

Education

- HKUST(GZ) - Information Hub - Data Science and Analysis (Sep 2024 - June 2026)

- Jinan University - Computer Science Department - Network Engineering (Sep 2020 - June 2024)

Latest News

- Paper Acceptance - Two papers accepted to AAAI 2026 (Nov 2025)

- Reviewer Appointment - Appointed as a reviewer for ICLR 2026 (Sep 2025)

- New Position - Joined Knowin AI Inc. as an Algorithm Researcher (Sep 2025)

- Program Committee - Invited to serve as a Program Committee member for AAAI 2026 (July 2025)

- Paper Acceptance - Paper accepted to an ICCV 2025 workshop (July 2025)

- Paper Acceptance - Paper on Traffic Flow Forecasting accepted to IEEE TITS (Aug 2025)

- Paper Acceptance - Paper on Multimodal Building Electricity Loads Forecasting accepted to ACM MM 2025 (July 2025)

- Paper Acceptance - Paper on Urban Heat Island Effect Forecasting accepted to KDD 2025 (May 2025)

- Paper Acceptance - Paper on Spatio-Temporal Forecasting accepted to ECML-PKDD 2025 (May 2025)

- Paper Acceptance - Paper on Multimodal Time Series Forecasting accepted to ICML 2025 (May 2025)

- Reviewer Appointment - Appointed as a reviewer for the IJCNN and ICASSP conferences (Dec 2024)

- Program Committee - Invited to serve as a Program Committee member for WebST 2025 (Jan 2025)

- Outstanding Graduate - Graduated from Jinan University and was recognized as an Outstanding Graduate of the Department of Information Science and Technology (June 2024)

- Research Internship - Joined the CityMind team, led by Prof. Yuxuan Liang, as a research intern (Aug 2023)

- RBCC Achievement - Participated in the offline RBCC held at HKUST(GZ) and was named an Outstanding Camper (July 2023)

Pinned Publications

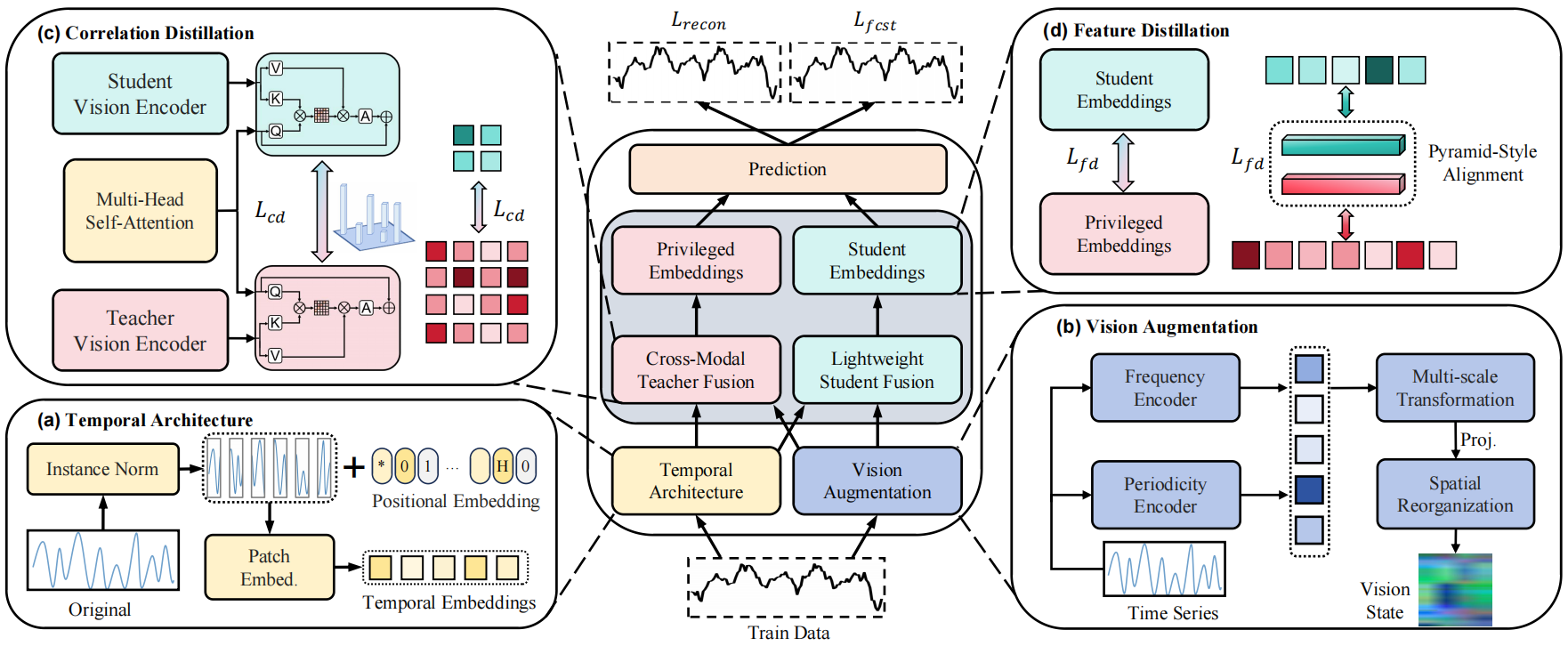

OccamVTS: Distilling Vision Models to 1% Parameters for Time Series Forecasting

Sisuo Lyu, Siru Zhong, Weilin Ruan, Qingxiang Liu, Qingsong Wen, Hui Xiong, Yuxuan Liang

AAAI 2026

We propose OccamVTS, a framework that distills large vision models down to only 1% of their original parameters for efficient time series forecasting, showing that this extreme parameter reduction can be achieved while maintaining strong predictive performance.

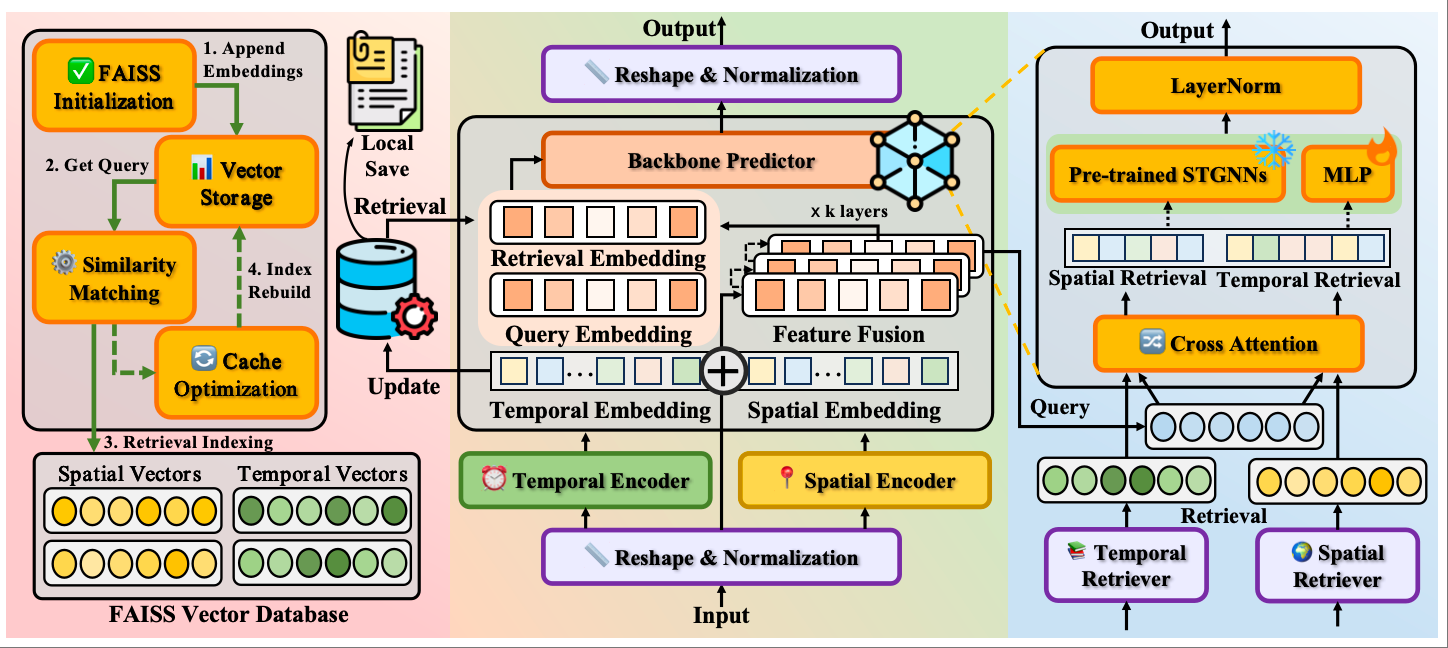

Retrieval Augmented Spatio-Temporal Framework for Traffic Prediction

Weilin Ruan, Xilin Dang, Ziyu Zhou, Sisuo Lyu, Yuxuan Liang

AAAI 2026

We propose RAST, a universal framework that integrates retrieval-augmented mechanisms with spatio-temporal modeling to address limited contextual capacity and low predictability in traffic prediction. The framework has three key components: a Decoupled Encoder and Query Generator, a Spatio-temporal Retrieval Store and Retrievers, and a Universal Backbone Predictor that can flexibly use either pre-trained STGNNs or simple MLP predictors.

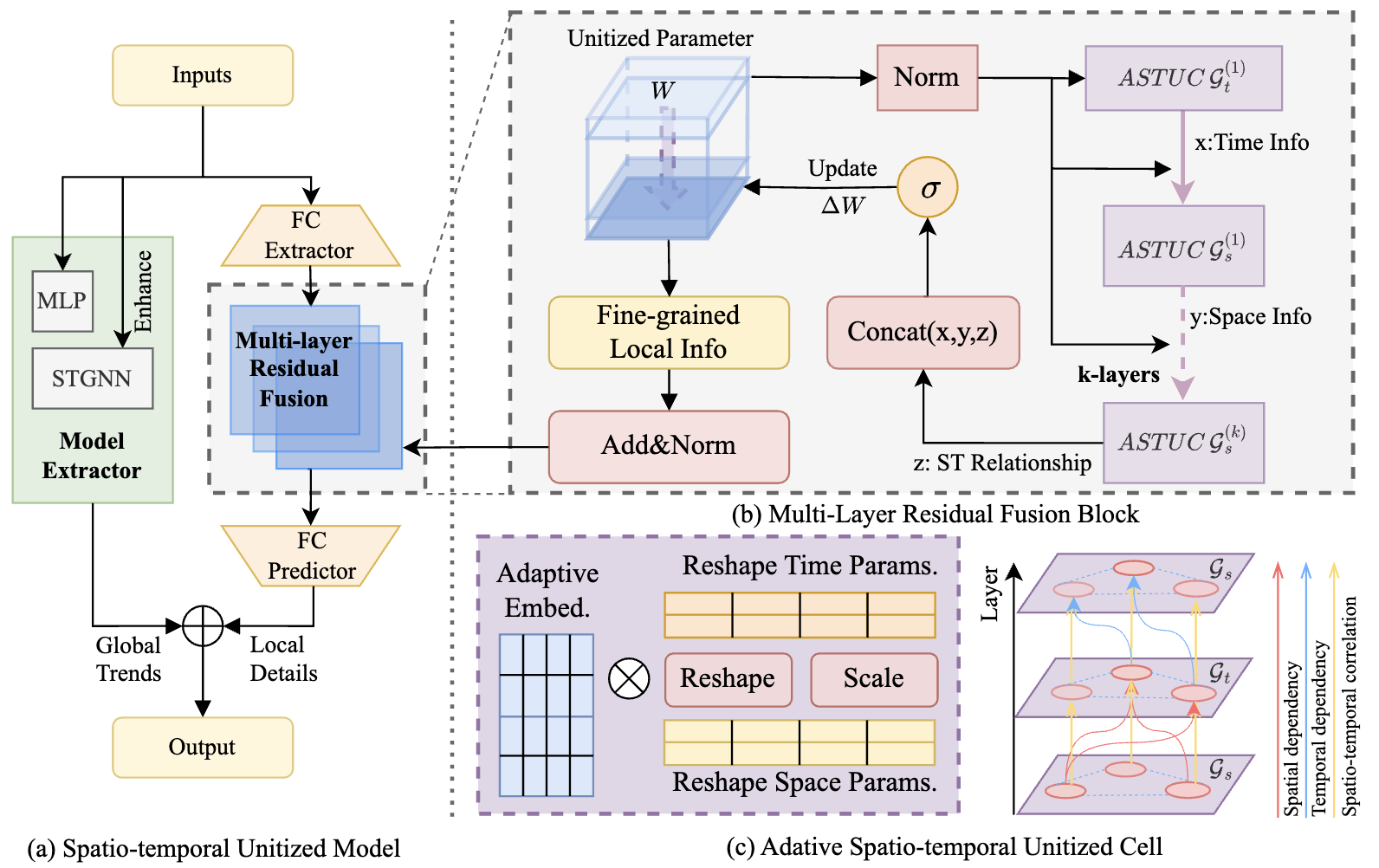

Cross Space and Time: A Spatio-Temporal Unitized Model for Traffic Flow Forecasting

Weilin Ruan, Wenzhuo Wang, Siru Zhong, Wei Chen, Li Liu, Yuxuan Liang

IEEE TITS 2025

This paper proposes a spatio-temporal unitized model for traffic flow forecasting that captures complex dependencies across both the spatial and temporal dimensions, achieving state-of-the-art performance on multiple benchmark datasets.

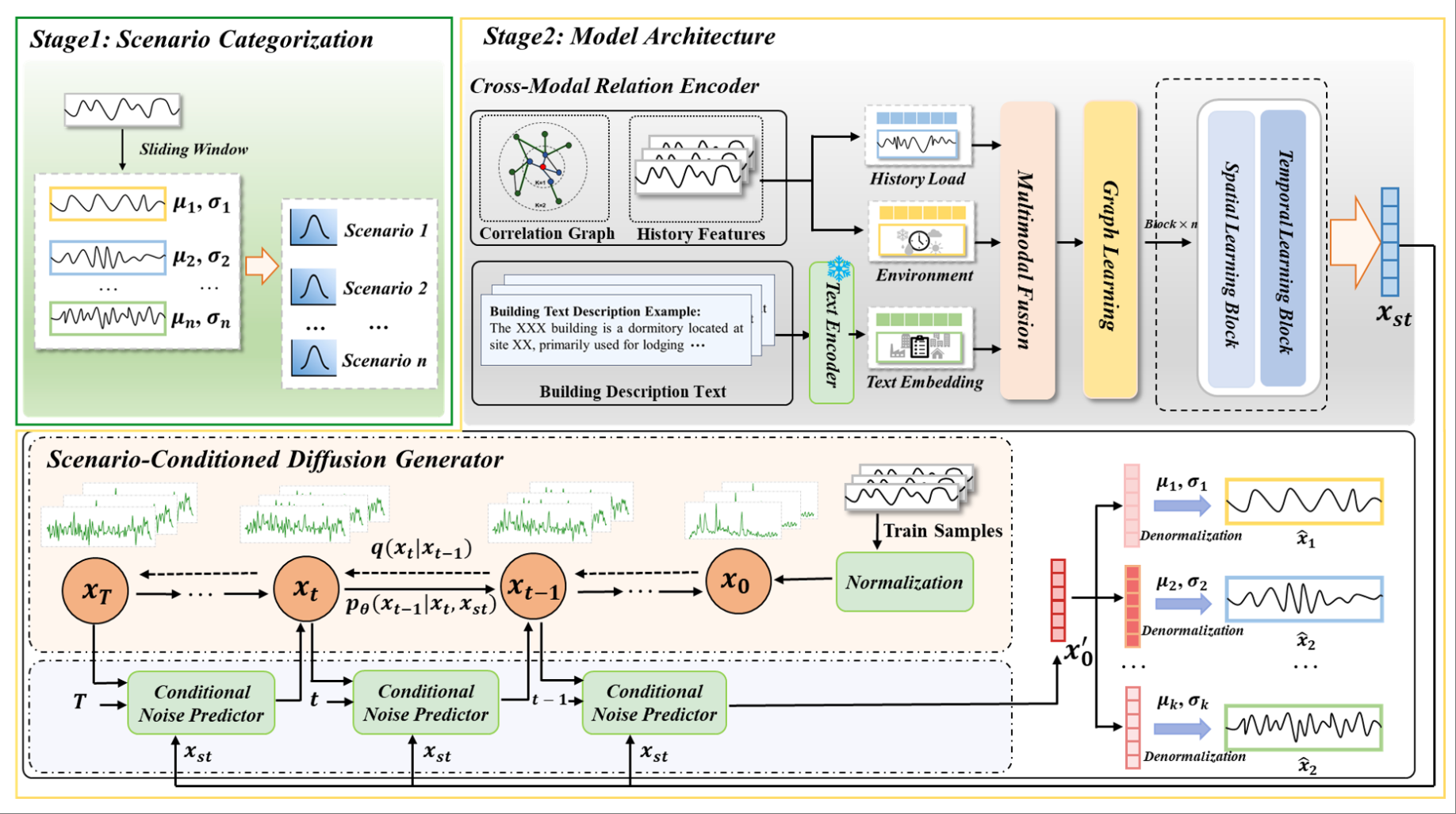

Towards Multi-Scenario Forecasting of Building Electricity Loads with Multimodal Data

Yongzheng Liu, Siru Zhong, Gefeng Luo, Weilin Ruan, Yuxuan Liang

ACM MM 2025

We propose MMLoad, a diffusion-based multimodal framework for multi-scenario building load forecasting built on three components: a Multimodal Data Enhancement Pipeline, a Cross-modal Relation Encoder, and a Scenario-Conditioned Diffusion Generator with uncertainty quantification. Together, they establish a new paradigm for multimodal learning in smart energy systems.

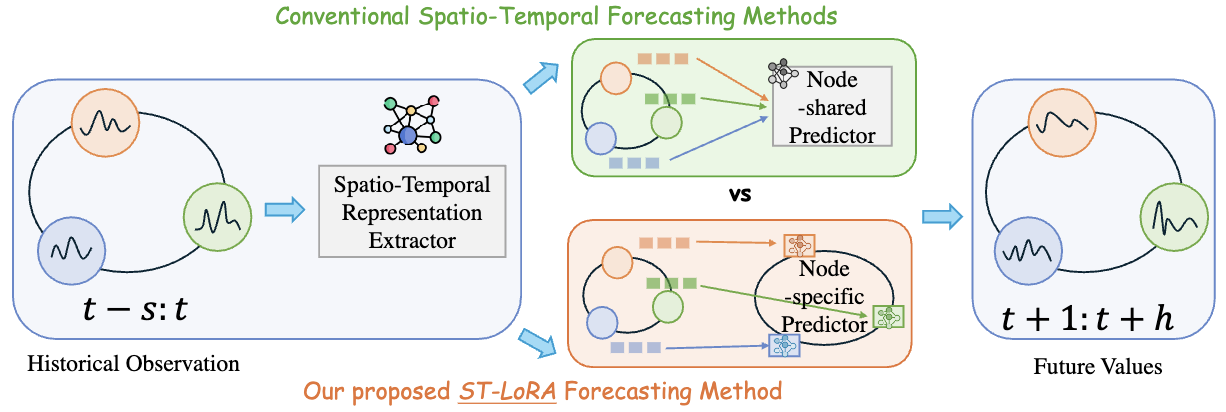

Low-rank Adaptation for Spatio-Temporal Forecasting

Weilin Ruan, Wei Chen, Xilin Dang, Jianxiang Zhou, Weichuang Li, Xu Liu, Yuxuan Liang

ECML-PKDD 2025

This paper presents ST-LoRA, a low-rank adaptation framework that serves as an off-the-shelf plugin for existing spatio-temporal prediction models. It alleviates node heterogeneity through node-level adjustments, with only a minimal increase in parameters and training time.

Selected Awards

- Outstanding Graduate - Jinan University (Fall 2024)

- Golden Arowana Scholarship - Jinan University (Fall 2023)

- Bronze Medal - ICPC International Collegiate Programming Contest (Fall 2022)